Learning From 3.8 Billion Years of R&D

I've been thinking a lot recently about the difference between "solving a problem" and "optimizing a solution." The distinction is subtle, and I think the history of the Japanese rail system illustrates it perfectly.

The Sonic Boom

In the late 1980s, the engineers building Japan's bullet train had a problem. Hitting 300 kilometers per hour was the easy part. But every time the train entered a tunnel at high speed, it pushed a massive wave of compressed air ahead of it. When that air burst out the other end, it produced what's usually described as a sonic boom (strictly speaking a "tunnel boom," since the train itself never comes close to the speed of sound), loud enough to rattle windows 400 meters away. Japan has strict noise laws. This was a dealbreaker.

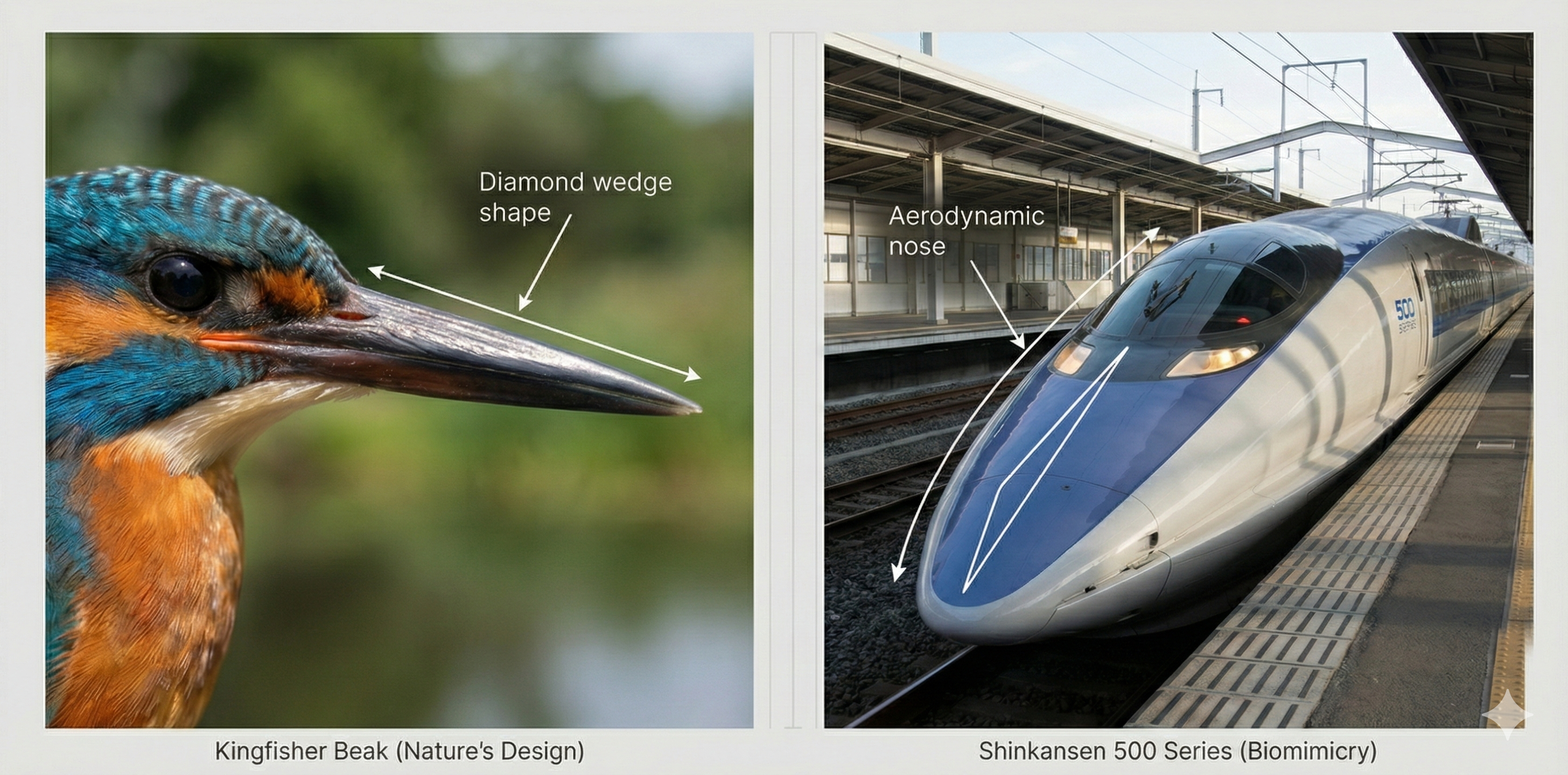

The fix came from an unexpected place. The project's general manager, Eiji Nakatsu, happened to be a birdwatcher. He'd spent time observing kingfishers, which dive from air into water at high speed with barely a splash. Their beaks are shaped like a diamond wedge. Instead of slamming into the water, they slip through it.[1]

So Nakatsu redesigned the train's nose to mimic the bird. The boom vanished. But here's where it gets interesting. The new design also cut air resistance by 30%, let the train run 10% faster, and dropped energy consumption by 15%.[2] They solved one problem and accidentally unlocked three more improvements just by copying something evolution had already figured out.

A History of Theft

This happens all the time. We get stuck on some engineering challenge, and the solution turns out to be something nature solved ages ago.

Flight: The Wright Brothers spent hours watching buzzards on the dunes of North Carolina. They noticed something interesting. When birds turned, they didn't hold their wings rigid and steer like a boat with a rudder. They twisted just the tips of their wings to roll into the turn. That became "wing-warping," which evolved into the ailerons you see on every airplane today.[3]

Velcro: In 1941, a Swiss engineer named George de Mestral went hiking and came back with his pants covered in burrs. Annoyed, he put one under a microscope. Tiny hooks, thousands of them, grabbing onto fabric loops. He spent eight years turning that into Velcro.[4]

Nature's been prototyping for 3.8 billion years. Most of the time, when we're stuck, the answer's already out there.

The AI Exception

This is what frustrates me about the current state of Artificial Intelligence. We seem to have stopped looking at the source material.

We are building the "pre-Kingfisher" train. Modern Large Language Models (LLMs) are miracles of engineering, but they are brute-force solutions. We achieve intelligence by stacking massive amounts of compute, drawing megawatts of power, and feeding models more data than a human could read in a thousand lifetimes. We are solving the problem by pushing harder against the air.

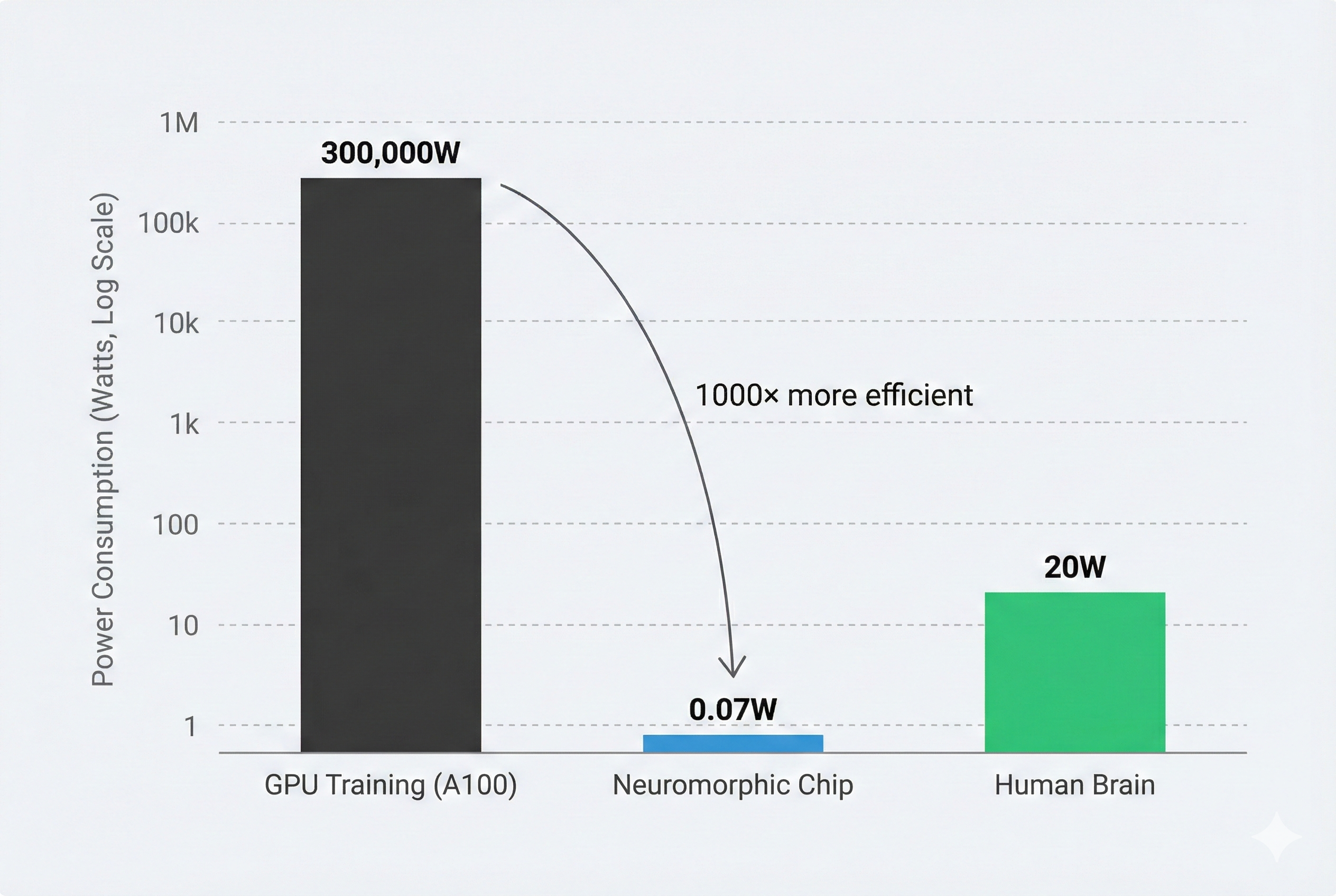

Compare that to the human brain. It runs on roughly 20 watts[5], about the same as a dim lightbulb. Yet it learns continuously, understands 3D space, and navigates social nuance without needing to read the entire internet first.

How Does It Do That?

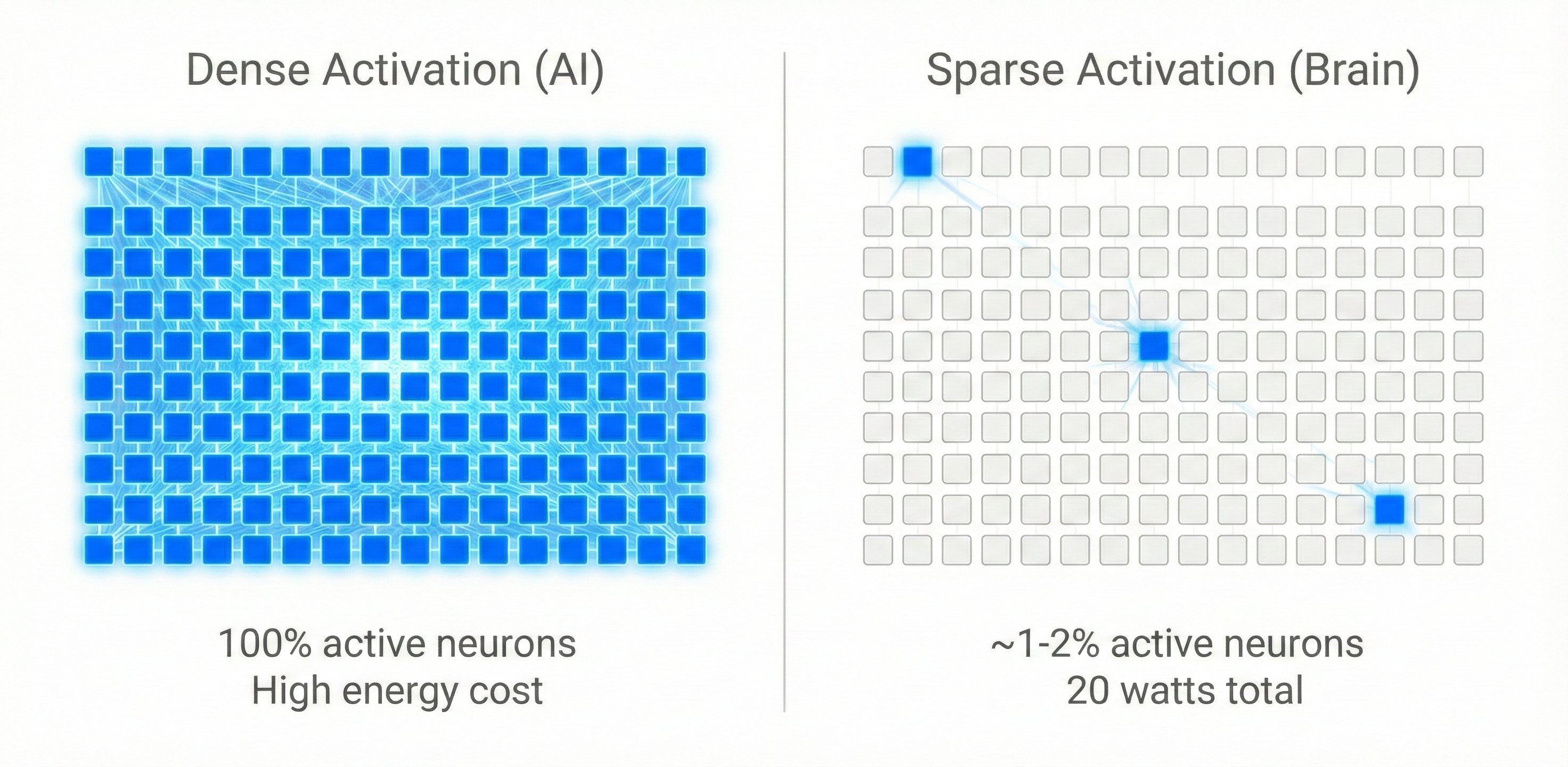

Let's be specific about what those 20 watts are buying you. Your primary visual cortex alone contains roughly 140 million neurons. At any given moment, when you're looking at this screen, only about 1-2% of those neurons are firing.[6] This is called sparse coding, and it's brutally efficient.

The rest aren't "off." They're being actively suppressed through lateral inhibition.[7] Neurons that aren't relevant to the current task are silenced by their neighbors. This means the brain isn't doing 140 million computations; it's doing maybe 2 million, but it's doing exactly the right 2 million.
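To make the numbers concrete: 1-2% of 140 million works out to roughly 1.4 to 2.8 million active neurons. In software, a common stand-in for this kind of competition is "k-winners-take-all," where only the strongest few units stay active and the rest are zeroed out. The sketch below is a toy illustration under that assumption, not a model of real lateral inhibition; the function name, the 2% fraction, and the random population are all made up for the example.

```python
import random

def k_winners_take_all(activations, active_fraction=0.02):
    """Keep only the strongest units; silence every other unit (set it to 0.0)."""
    k = max(1, round(len(activations) * active_fraction))
    # Indices of the k largest activations: these are the "winners".
    winners = set(
        sorted(range(len(activations)), key=lambda i: activations[i], reverse=True)[:k]
    )
    return [a if i in winners else 0.0 for i, a in enumerate(activations)]

# Hypothetical population of 1,000 units with random activity levels.
rng = random.Random(0)
population = [rng.random() for _ in range(1000)]

sparse = k_winners_take_all(population, active_fraction=0.02)
active = sum(1 for a in sparse if a != 0.0)
print(active, "of", len(sparse), "units active")
```

Everything downstream of a layer like this only has to deal with the handful of units that survived, which is where the savings would come from.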

Compare this to a standard dense transformer. GPT-4 is widely reported to have roughly 1.76 trillion parameters, though OpenAI has never confirmed the figure.[8] In a dense model, asking a question activates all of the parameters. Every single weight gets multiplied. There's no concept of "this part isn't relevant, skip it." The entire network lights up for every token.

Olshausen and Field showed this in their seminal 1996 paper on sparse coding: the brain learns representations that are minimally active while still being maximally informative.[9] Recent work on Mixture of Experts (MoE) architectures is finally trying to mimic this, routing inputs to specialized sub-networks instead of the whole model. But we're still burning orders of magnitude more energy than biology.
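The routing idea behind Mixture of Experts can be sketched in a few lines. This is a simplified illustration, assuming a top-k gate over scalar "experts"; real MoE layers use learned gating networks and full feed-forward blocks, and every name and number here is hypothetical.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, experts, x, k=2):
    """Run only the k highest-scoring experts and mix their outputs."""
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    # Renormalize the gate weights over the chosen experts only.
    weights = softmax([gate_scores[i] for i in top])
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top

# Hypothetical toy experts: each one is just a scalar function.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 1.5, -1.0]  # in a real model, produced by a learned gate

y, used = route_top_k(gate_scores, experts, x=3.0, k=2)
print("experts that ran:", used)
print("mixed output:", y)
```

Two of the four experts never execute for this input. That skipped work is the whole point, though it is still a long way from 98% of the network staying silent.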

And then there's plasticity. Your brain is learning right now, as you read this, without forgetting how to ride a bike or what your childhood home looked like. Neural networks catastrophically forget. Train a model on Task A, then Task B, and it will obliterate its knowledge of Task A unless you carefully engineer around it (replay buffers, elastic weight consolidation, etc.).
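For a sense of what "engineering around it" looks like, here is a rough sketch of the penalty term at the heart of elastic weight consolidation. The idea is to add a cost for moving weights that mattered for the old task, weighted by an importance estimate (in the original method, the diagonal of the Fisher information). The numbers below are invented for illustration, and the function name is my own.

```python
def ewc_penalty(params, old_params, importance, strength=1.0):
    """Quadratic cost for moving weights that were important for the old task."""
    return 0.5 * strength * sum(
        f * (p - p_old) ** 2
        for p, p_old, f in zip(params, old_params, importance)
    )

old_params = [1.0, -2.0, 0.5]   # weights after training on Task A
importance = [10.0, 0.1, 0.0]   # hypothetical per-weight importance for Task A

moved_important = [1.5, -2.0, 0.5]     # Task B nudged a weight Task A relied on
moved_unimportant = [1.0, -2.0, 3.0]   # Task B moved a weight Task A never used

print(ewc_penalty(moved_important, old_params, importance))
print(ewc_penalty(moved_unimportant, old_params, importance))
```

During training on Task B, this penalty would be added to the ordinary Task B loss, so the optimizer is free to repurpose unimportant weights but pays for disturbing important ones.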

The brain solves this through complementary learning systems: the hippocampus rapidly encodes new memories, while the cortex slowly integrates them without disrupting old knowledge. We've known about this since McClelland's work in the 90s,[10] but production AI largely ignores it because it's architecturally inconvenient.

The Honest Part

Here's where I need to be careful. I just spent several paragraphs talking about what the brain does better than AI. But the truth is, we don't actually know how most of it works.

We can measure sparse firing. We can see lateral inhibition happening. We know the hippocampus is involved in memory formation. But the mechanisms? The actual algorithms the brain is running? We're mostly guessing.

We don't know how the cortex does credit assignment without backpropagation. We don't know how neurons coordinate timing across millions of cells. We don't fully understand how the brain binds information across different regions into coherent thoughts. Hell, we still argue about what consciousness even is.

So there's a real risk here. You can't just say "copy the brain" when you don't understand what you're copying. That's cargo cult engineering. Building a wooden control tower and expecting planes to land.

But here's the thing: we don't need to understand everything. The Wright Brothers didn't need a PhD in avian biology. They just needed to notice that twisting wings creates control. Nakatsu didn't need to model fluid dynamics at the molecular level. He just needed to see that a certain shape cuts through pressure transitions cleanly.

The principles matter more than the mechanisms. Sparsity is valuable whether or not we understand exactly how lateral inhibition implements it. Energy efficiency is worth pursuing even if we can't fully replicate dendritic computation. Continuous learning without catastrophic forgetting is clearly possible in biological systems, so it's worth figuring out, even if our first attempts look nothing like the hippocampus.

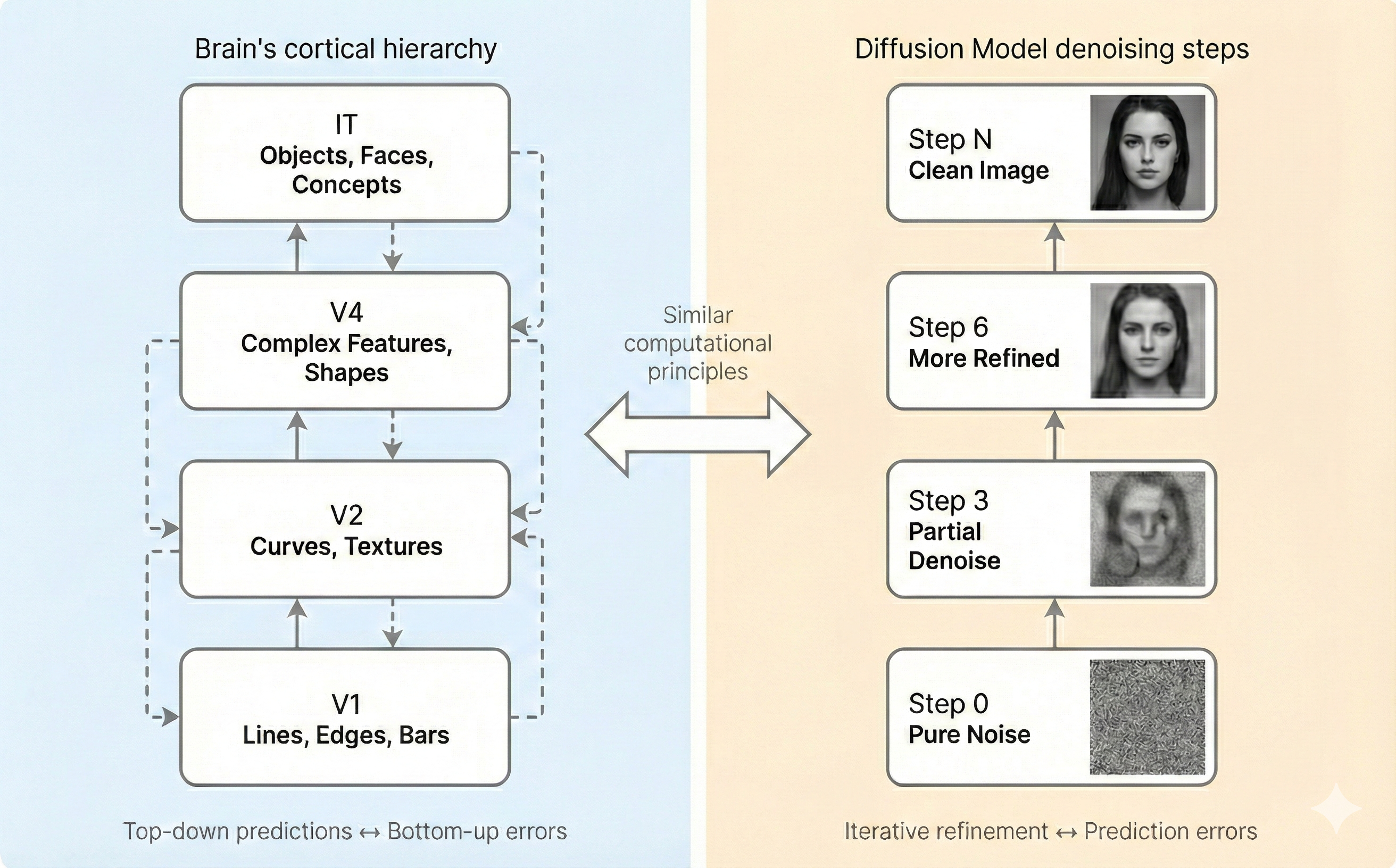

And here's the part people miss: it's a feedback loop. We build models inspired by the brain. Those models behave in unexpected ways. Those behaviors give us new hypotheses about how the brain might actually work. We test those hypotheses in neuroscience. That informs the next round of models. This is already happening. Convolutional neural networks, originally inspired by the visual cortex, have taught us things about how the visual cortex processes hierarchical features that we didn't know before. Attention mechanisms in transformers have sparked new questions about how biological attention actually works.

We're not trying to build a brain. We're trying to build something that works as well as a brain does, using constraints that biology had to respect: limited energy, limited space, real-time learning. And in doing that, we might actually understand both systems better.

What's Actually Being Done

I don't want to sound like I'm just complaining. There are people working on this.

Numenta, founded by PalmPilot creator Jeff Hawkins, has spent two decades trying to reverse-engineer the neocortex. Their Hierarchical Temporal Memory (HTM) framework mimics cortical columns, the repeating vertical structures in your brain's gray matter.[11] It's sparse, it's temporal, and it learns continuously. Their newer Thousand Brains Project (Monty) takes this further, implementing Hawkins' theory that every cortical column builds its own model of the world through sensorimotor learning, then votes with others to reach consensus.[18] But these approaches are still slow and hard to scale, which is why you don't see them winning ImageNet competitions.

Intel's Loihi chip[12] and IBM's TrueNorth[13] are neuromorphic processors, hardware designed to run spiking neural networks efficiently. Unlike GPUs that multiply matrices in lockstep, these chips operate asynchronously, firing neurons only when needed, like biology. Loihi can run certain tasks on 1/1000th the power of a conventional processor. But the software ecosystem isn't there yet. Training spiking networks is still an open problem.
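What "firing only when needed" means is easiest to see in the simplest spiking model, the leaky integrate-and-fire neuron. The sketch below is a generic textbook-style toy in discrete time, not the neuron model of Loihi or TrueNorth specifically; the threshold, leak, and input values are assumptions chosen for illustration.

```python
def simulate_lif(input_current, threshold=1.0, leak=0.9, reset=0.0):
    """Discrete-time leaky integrate-and-fire neuron.

    Returns the list of time steps at which the neuron spiked.
    """
    v = reset  # membrane potential
    spikes = []
    for t, current in enumerate(input_current):
        v = leak * v + current   # potential decays, then integrates the input
        if v >= threshold:       # crossing the threshold emits a spike...
            spikes.append(t)
            v = reset            # ...and the potential resets
    return spikes

quiet = [0.0] * 20                          # no input at all
burst = [0.0] * 5 + [0.6] * 5 + [0.0] * 10  # a brief pulse of input

print(simulate_lif(quiet))
print(simulate_lif(burst))
```

With no input the neuron emits nothing, so nothing downstream has to compute anything. Output only appears around the pulse. The hard step in that loop is the threshold: it isn't differentiable, which is a large part of why training these networks is awkward.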

And the progress is accelerating. In mid-2025, researchers demonstrated a Hierarchical Reasoning Model (HRM) with just 27 million parameters (tiny by modern standards) that achieved 40.3% on the notoriously difficult ARC-AGI benchmark, outperforming models orders of magnitude larger.[14] What's remarkable isn't just the size; it's that it was trained on only 1,000 examples (not billions), uses no pre-training, and solves complex reasoning tasks that stump large language models. The architecture mimics cortical hierarchy: high-level abstract processing combined with low-level detail processing at different timescales, just like theta and gamma rhythms in the brain. When the researchers measured its internal dimensionality structure, it matched the dimensional hierarchy found in mouse cortex.

On the energy front, recent work on spiking neural networks for large language models achieved 69.15% sparsity through event-driven computation, meaning roughly 70% of the network stays silent at any given time, just like biological sparse coding.[15] The result: 97.7% energy reduction compared to standard computation and a >100× speedup for processing long sequences. They trained it with less than 2% of the data typical LLMs require. This isn't a toy model running on a research cluster. It was trained on hundreds of GPUs and achieves competitive performance on real benchmarks.

There's also recent work on biologically plausible learning rules. Backpropagation (the algorithm that trains every major neural network) is almost certainly not how the brain learns. It requires symmetric weights, global error signals, and separate forward/backward passes. None of that makes biological sense.

Alternatives like predictive coding[16] (your brain is constantly predicting what comes next and learning from prediction errors) and equilibrium propagation[17] (learning by nudging a network toward equilibrium states) are gaining traction. These aren't just academically interesting. They're potentially more energy-efficient because they don't require storing activations for backprop.
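To give a flavor of how predictive coding differs from backprop, here is a deliberately tiny sketch: one hidden cause `x` tries to predict an observation `y` through weights `w`, and both the inference step and the weight update use only the local prediction error. This is a toy under my own assumptions (a single scalar latent, linear predictions, hand-picked learning rates), not the formulation from the cited work.

```python
def predictive_coding_round(y, w, x, infer_steps=20, lr_x=0.1, lr_w=0.05):
    """One round of inference followed by a local weight update.

    Returns the updated weights, the updated latent, and the squared error.
    """
    # Inference: adjust the latent cause until its prediction w * x fits y better.
    for _ in range(infer_steps):
        errors = [yi - wi * x for yi, wi in zip(y, w)]
        x += lr_x * sum(wi * ei for wi, ei in zip(w, errors))

    # Learning: each weight changes using only its own error and the latent activity.
    errors = [yi - wi * x for yi, wi in zip(y, w)]
    w = [wi + lr_w * ei * x for wi, ei in zip(w, errors)]
    return w, x, sum(e * e for e in errors)

y = [1.0, 2.0, -1.0]   # hypothetical observation
w = [0.5, 0.5, 0.5]    # initial weights
x = 0.0                # initial guess for the hidden cause

history = []
for _ in range(50):
    w, x, err = predictive_coding_round(y, w, x)
    history.append(err)

print("first error:", history[0])
print("last error:", history[-1])
```

There is no separate backward pass here and no stored activations. Each update depends on an error signal and an activity level that are available right where the weight lives, which is the property that makes these rules attractive for low-power hardware.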

But here's the problem: all of this is niche. The mainstream conversation is still "how do we make transformers bigger?" because that's what's economically rewarded right now.

Why I'm Betting on Biology

The brain achieves its efficiency through mechanisms we largely ignore in production AI: sparsity (only using a few neurons at a time) and plasticity (learning without overwriting old data). These aren't just optimizations. They're fundamental to how intelligence works in the physical world.

I believe the curve of "just add more compute" is going to flatten. Not because we'll run out of compute, but because the returns will diminish. We're already seeing it. GPT-4 to GPT-5 isn't the same leap as GPT-2 to GPT-3. The low-hanging fruit is gone.

The next great leap won't come from a bigger data center. It will come from doing what the Wright Brothers and Eiji Nakatsu did: looking outside, seeing how nature solved the problem, and building that.

The question isn't whether we'll eventually build brain-like AI. Evolution already proved it's possible. The question is whether we'll learn the lesson before we've built a thousand more power plants to feed the current approach, or whether we'll keep pushing harder against the air until the sonic boom becomes unbearable.